Algorithms, Results & Analysis

At the very begin, after thorough analysis for the task and the given dataset, we attempted to use the built Decision Tree (ID3) in assignment 1 firstly. It is a non-parametric supervised learning method used for classification and regression. The goal is to create a model that predicts the value of a target variable by learning simple decision rules inferred from the data features. ID3 (Iterative Dichotomiser 3) was developed in 1986 by Ross Quinlan. The algorithm creates a multiway tree, finding for each node (i.e. in a greedy manner) the categorical feature that will yield the largest information gain for categorical targets. Trees are grown to their maximum size and then a pruning step is usually applied to improve the ability of the tree to generalise to unseen data.

In this experiment, we adjusted training data size from 500 to 20,000 to test the prediction accuracy of such model. And the results are shown as following table. From the results table, it is easy to see that the best accuracy with pruning is about 85.88%. As far as we are concerned, the reason why the accuracy is only 85% may be the processing for “continuous” variables is somewhat unreasonable. In addition, ID3 is not suitable to deal with the “continuous” attributes.

After that, we used another DT algorithm named C4.5 in WEKA to train the model. C4.5 is the successor to ID3 and removed the restriction that features must be categorical by dynamically defining a discrete attribute (based on numerical variables) that partitions the continuous attribute value into a discrete set of intervals. C4.5 converts the trained trees (i.e. the output of the ID3 algorithm) into sets of if-then rules. These accuracy of each rule is then evaluated to determine the order in which they should be applied. Pruning is done by removing a rule’s precondition if the accuracy of the rule improves without it. Here, we did not change its parameters. And the prediction accuracy was up to 90.95%. We consider it reasonable as such algorithm is better to tackle the “continuous” variables and maybe its default parameters could boost the effect of model more than the parameters chosen in ID3.

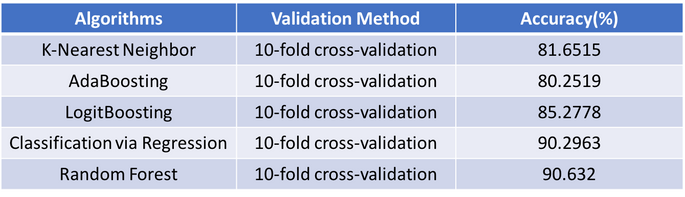

At this point, we decided to try some other classification methods except for Decision Tree. We investigated some algorithms in WEKA including Random Forest (RF), Ada Boosting (AB), K-Nearest Neighbor (KNN), Logit Boosting (LGB), and Classification via Regression (CVR). When training, we almost left all parameters as default, and the results are shown as the following table.In the next part, we would analyze these algorithms individually.

-

K-Nearest Neighbor (K-NN) is a non-parametric method used for classification and regression. In KNN classification, the output is a class membership. An object is classified by a majority vote of its neighbors, with the object being assigned to the class most common among its K nearest neighbors. It is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. The training examples are vectors is a multidimensional feature space, each with a class label. The training phase of the algorithm consists only of storing the feature vectors and class labels of the training samples. A commonly used distance metric for continuous variables is Euclidean distance. As for result, it only came up to 81.6515% when we set K=30. We deem that it might be due to classification scoring is unreasonable and our dataset is not balanced enough for such algorithm to classify.

-

Ada Boosting training process selects only those features known to improve the predictive power of the model, reducing dimensionality and potentially improving execution time as irrelevant features need not be computed. A boost classifier is a classifier in the form , where each ft is a weak learner that takes an object x as input and returns a value indicating the class of the object. The Tth classifier is positive if the sample is in the positive class and negative otherwise. Each weak learner produces an output hypothesis, h(xi), for each sample in the training set. At each iteration t, a weak learner is selected and assigned a coefficient αt such that the sum training error Et of the resulting t-stage boost classifier is minimized. As for the accuracy of Ada Boosting, 80.2519% is barely satisfying but there is still great potential to improve. We suggested that it might be limited data size that contributed to such result.

-

Logit Boosting is a boosting algorithm formulated by Jerome Friedrman, Trevor Hastie, and Robert Tibshirani. Specifically, if one considers AdaBoost as a generalized additive model and then applies the cost functional of logistic regression, one can derive the LogitBoost algorithm. LogitBoost can be seen as a convex optimization. Specifically, given that we seek an additive model of the form , the LogitBoost algorithm minimizes the logistic loss: . The final model can be a weighted sum of your weak models. With LogitBoost, we can get better results since it can reduce bias as well as variance. Hereby, we got the result of 85.2778%, which is better than AdaBoost.

-

Classification via Regression is a machine learning algorithm that builds regression models to predict for the positive class whether a particular example belongs to it or not. The probabilities are generated by transforming the predictions with a softmax. And the accuracy of such method in WEKA is up to 90.2963%, which is relatively satisfactory compared with other algorithms.

-

Random Forest is an ensemble learning method for classification, regression and other tasks, that operate by constructing a multitude of decision trees at training time and outputting the class that is the mode of the classes or mean prediction of the individual trees. Such method corrects for DT’s habit of overfitting to their training set. The training algorithm for random forests applies the general technique of bagging to tree learners. It uses a modified tree learning algorithm that selects, at each candidate split in the learning process, a random subset of the features. Back to our model, the accuracy was up to 90.632%, which is the highest among all the used algorithms.

All in all, based on the aforementioned analysis, random forest and classification via regression are suitable algorithms with high-efficiency and good-effects for our task.

After discussion with instructor, we added “attributed_time”, along with “is_attributed”, as our output labels for both training and testing. We make this adjustment because although the “attributed_time” is only available to clicks that eventually download the app, the information it carries is still worth considering, and this info might be able to help us further improve the accuracy of our classification. Our idea is: we could regard all of the features mentioned above as numerical variables, and input them into a neural network to predict the corresponding attributed time. For the training data, even though the users not downloading the app don’t have any available value of “attriubted_time” (NaN given), we could manually assign them a value that makes sense and could properly reflect their status. According to the dataset mentioned above, the “attributed_time” for downloading users ranges from 0 to at most 70000+ seconds counting from 2017-11-6, 00:00:00. Therefore, it is natural way to set the “attributed_time” to infinity (a very large number) to denote that the user has never downloaded the app. So as a final step of processing the data for our following algorithm, we let all the users without “attributed_time” get a large value of 1000000, which is far larger than all the existing download time provided.

After finalizing the small changes to dataset, we applied MLP regressor model in sklearn packet using python, to predict the attributed_time of users in the testing set. As is discussed above, we utilized “train_test_split” module to randomly pick a 3:1 ratio of training and testing set from the original data set. And after retrieving the result value of attributed_time, we used a threshold of 750000 to determine whether the app is downloaded: If predicted value of “attributed_time” is greater than 750000, we let “is_attributed” = 0; Otherwise, we assign 1 to “is_attributed”. We compare the predicted class with the true result given in testing data, and the overall accuracy retrieved can only reach approximately 85%.

In view of this result, we adverted to MLP classifier to see, if we do not consider “attriubuted_time” and only make classification of “is_attriubuted” based on the previous features, how will this model perform in terms of accuracy. After applying this model, we could get an accuracy at most 88% (86% in average). But in this process we discovered some interesting outcomes worth delving into: From the confusion_matrix of MLP classifier, we could see that a 92% precision is achieved when the original class of “is_attriubuted” is 1. On the other hand, when the original class is 0, the precision of MLP classifier will decrease to 82%. An average of these two cases has led to the final 86% accuracy in this model. So here comes another possibility: we could integrate MLP regressor and MLP classifier, combine their algorithm together and keep their components with high precision to find our prediction result.

Our modified algorithm is: first use our previous MLP regressor and MLP classifier model to train on the same dataset in parallel. Then, apply the two trained models on the testing set to get their results for each of the example respectively. For each testing example, MLP classifier will directly return its predicted class Nc, and MLP regressor will return the attributed_time Tr . If Tr is greater than 750000, we let the class given by MLP regressor be 0, otherwise the class given by regressor will be assigned 1. We denote the finally decided class by MLP regressor as Nr, and consider the results of Nc and Nr under different conditions:

1) If Nc = 1, since MLP classifier can get 92% precision on the original examples with real class = 1, we simply let our final prediction of “is_attributed” be 1. This could at least guarantee 92% accuracy for this proportion of testing examples.

2) If Nc != 1, then we compare Nc and Nr altogether. If Nc = Nr = 0, then we could assume their predictions are reliable, and we can let our final prediction of “is_attributed” be 0.

3) If neither 1) nor 2) are satisfied, i.e. Nc = 0 and Nr = 1, we know from the confusion matrix that the MLP classifier would misclassify approximately 20% of the examples with class 1 to class 0. Thus, we need to utilize the result of MLP regressor to deal with these examples. Our method is as follows: for the examples fallen in case 3), if the prediction result of “attributed_time” from MLP regressor, Tr is less than 300000, then we let our final prediction of “is_attributed” be 1. Otherwise, we assume the result of MLP classifier is correct, and let our final classification of the example be 0.

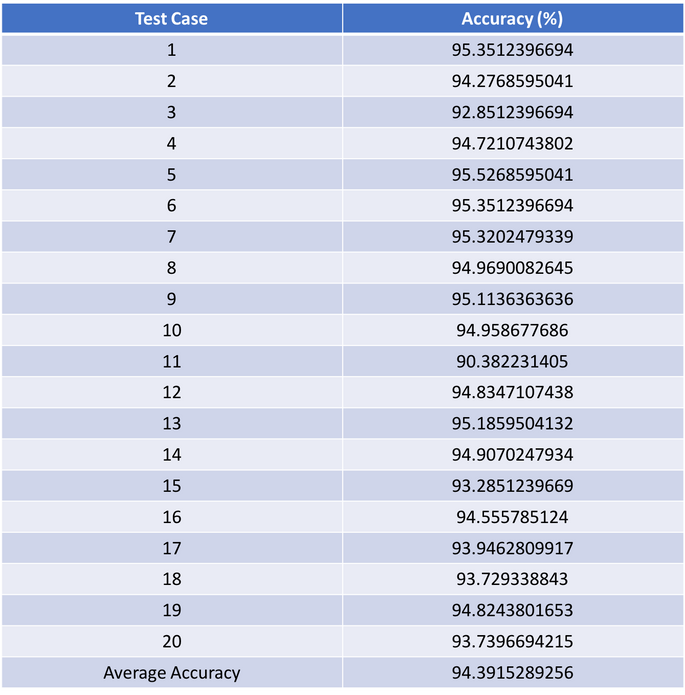

Consequently, with our combination of MLP regressor & classifier model discussed above, we individually ran the algorithm for 20 times on the original dataset. Each time the training set and testing set are selected randomly, and the table below shows the resulting accuracy on the testing set. The highest reachable accuracy of them is 95.5%, and the average of these 20 repeated testing cases is 94.4%.